Introduction to Video Streaming Technologies

History of Video Streaming Technology

With the growth of the Internet, digital video has become a routine part of many businesses and scenarios, and it has built up a varied history along the way.

Since the 2000s, various companies have developed their own video streaming technologies. Each of these technologies used its own protocol and its own server and client software, so adopting them in a business was sometimes difficult because it required familiarity with each one. With these technologies, the client and the server could control the quality of the playback video and audio over their communication link, but some network environments, such as those behind routers and network address translation (NAT), made them difficult to deploy.

In the late 2000s, there were strategic efforts to bring video streaming in line with HTTP, and various companies developed HTTP-based adaptive streaming technologies for video delivery. HTTP-based adaptive streaming has largely displaced the earlier streaming technologies described above and moved the video streaming world to HTTP.

Normally, when a video file is played via simple HTTP download, the available download bandwidth and the video quality are the critical factors for smooth playback, even if the player can start playing the video while it is still being downloaded.

In short, if the video bitrate (the amount of video data per second of playback) is higher than the download bandwidth, the download cannot keep up with playback and the video will stall partway through.
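As a rough worked example of this condition, the following Python sketch estimates how long a simple progressive download would spend stalled. It assumes a constant bitrate, a constant bandwidth, and a player that starts immediately with no startup buffer. The function name and the figures are illustrative assumptions, not measurements.

```python
def total_stall_seconds(duration_s: float, bitrate_kbps: float, bandwidth_kbps: float) -> float:
    """Estimate total stall time for a progressive download (simplified model).

    Assumes constant bitrate and bandwidth, and playback starting at time zero.
    """
    # Time needed to download the whole file at the given bandwidth.
    download_time_s = duration_s * bitrate_kbps / bandwidth_kbps
    # If downloading takes longer than the video lasts, the difference is spent stalled.
    return max(0.0, download_time_s - duration_s)


# A 60-second video encoded at 5000 kbps over a 4000 kbps connection.
print(total_stall_seconds(60, 5000, 4000))  # 15.0
# The same video encoded at 3000 kbps downloads faster than it plays.
print(total_stall_seconds(60, 3000, 4000))  # 0.0
```

In this model, lowering the bitrate below the bandwidth is the only way to remove the stall, which is the idea that adaptive streaming automates.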

A technology called adaptive streaming attempts to solve this problem.

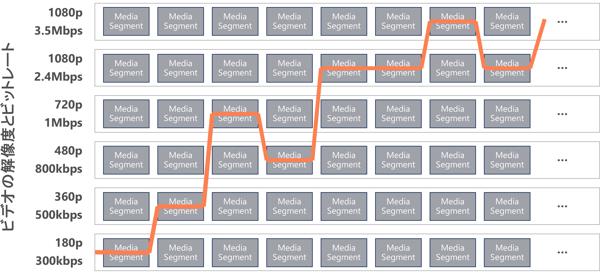

Adaptive streaming is based on splitting a video file into multiple segments, then downloading and playing the segments one after another. A single video segment is, for example, 2 to 10 seconds long. By preparing a sequence of these segments for each of several qualities (bitrates) and resolutions, the video player can choose an appropriate quality and resolution for each segment and switch between them adaptively, depending on the speed and state of the connection between the video web server and the player.

With this technology, the playback client can switch the video quality during playback according to the available network bandwidth, so that playback can continue without stopping or stalling.

In addition, because it is HTTP-based, you can use content delivery networks (CDNs) with HTTP caching to deliver video segment files to large audiences at massive scale while minimizing the additional load on your streaming servers.
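The per-segment quality switch can be sketched in Python as follows. This is a minimal throughput-based selection rule, not the algorithm of any particular player. The bitrate ladder, the `safety_factor` of 0.8, and the throughput figures are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rendition:
    name: str
    bitrate_kbps: int


def choose_rendition(renditions, measured_kbps, safety_factor=0.8):
    """Pick the highest-bitrate rendition that fits within the measured throughput.

    A safety factor keeps some headroom; if nothing fits, fall back to the
    lowest-bitrate rendition so playback can continue.
    """
    budget = measured_kbps * safety_factor
    ordered = sorted(renditions, key=lambda r: r.bitrate_kbps)
    affordable = [r for r in ordered if r.bitrate_kbps <= budget]
    return affordable[-1] if affordable else ordered[0]


# Hypothetical bitrate ladder prepared on the server side.
LADDER = [
    Rendition("360p", 800),
    Rendition("720p", 2500),
    Rendition("1080p", 5000),
]

# Hypothetical throughput measured before each segment request, in kbps.
throughput_trace_kbps = [6500, 3500, 900, 7000]

choices = [choose_rendition(LADDER, kbps).name for kbps in throughput_trace_kbps]
print(choices)  # ['1080p', '720p', '360p', '1080p']
```

Real players usually combine a throughput estimate like this with the current buffer level, but the basic trade-off is the same: drop to a lower bitrate when the connection slows, and return to a higher one when it recovers.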

Adaptive Streaming

For adaptive streaming, three technologies were developed around 2009. Each supported both live and video-on-demand delivery, and each had its own dedicated client player.

- Adobe HTTP Dynamic Streaming (HDS)

- Apple HTTP Live Streaming (HLS)

- Microsoft Smooth Streaming

At the time, HTML did not define the <video> or <audio> tags. Media such as video and audio, known as rich media, had to be played back by player implementations running in browser plugins. For this reason, service providers had to support all three of these streaming technologies in order to deliver content to as large an audience as possible.

With the spread of mobile devices and multi-device viewing, standardizing video streaming and client technologies became an issue, and various companies contributed to the development of a common standard streaming technology and client platform.

On the streaming side, MPEG-DASH (Dynamic Adaptive Streaming over HTTP) was established in 2012 as the first international standard for HTTP-based adaptive streaming. Microsoft and Adobe contributed to the development of MPEG-DASH based on their own technologies and experience.

In addition, the DASH Industry Forum, established with Akamai, Ericsson, Microsoft, Netflix, Qualcomm, and Samsung as founding members, works to popularize the standardized MPEG-DASH technology while resolving real-world compatibility problems and publishing guidelines and recommendations for the market.

Alongside MPEG-DASH, Apple submitted its HLS technology to the IETF (Internet Engineering Task Force) as an Internet-Draft in 2009, when it was first developed, and HLS was finally published as RFC 8216 in 2017.

On the client side, the W3C introduced the <video> and <audio> tags in HTML5, and Google, Microsoft, and Netflix developed a technology called Media Source Extensions (MSE) as a media extension for HTML5. As a result, MPEG-DASH video can be played back with a player implemented in JavaScript.

The Current State of Video Streaming

Currently, two adaptive streaming technologies, MPEG-DASH and HLS, are used in many services and applications.

- ISO/IEC 23009-1 - MPEG-DASH (Dynamic Adaptive Streaming over HTTP)

- IETF RFC 8216 - HTTP Live Streaming (HLS)
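To make the HLS side more concrete, the sketch below reads the variant streams out of a small playlist that uses the `#EXT-X-STREAM-INF` tag defined in RFC 8216. The playlist content, the URIs, and the `parse_variants` helper are made up for illustration, and the parser handles only the attributes shown here rather than the full specification.

```python
import re

# Matches KEY=value pairs, where the value is either a quoted string
# (which may contain commas) or an unquoted token.
ATTR_RE = re.compile(r'([A-Z0-9-]+)=("[^"]*"|[^,]*)')

# Hypothetical playlist listing two qualities of the same video.
SAMPLE_PLAYLIST = """#EXTM3U
#EXT-X-STREAM-INF:BANDWIDTH=800000,RESOLUTION=640x360,CODECS="avc1.4d401e,mp4a.40.2"
360p/index.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=2500000,RESOLUTION=1280x720,CODECS="avc1.4d401f,mp4a.40.2"
720p/index.m3u8
"""


def parse_variants(playlist_text):
    """Return one dict per variant stream found in the playlist text."""
    lines = [ln.strip() for ln in playlist_text.splitlines() if ln.strip()]
    if not lines or lines[0] != "#EXTM3U":
        raise ValueError("not an HLS playlist: missing #EXTM3U")
    variants = []
    pending = None  # attributes of the most recent #EXT-X-STREAM-INF tag
    for line in lines[1:]:
        if line.startswith("#EXT-X-STREAM-INF:"):
            attr_text = line.split(":", 1)[1]
            pending = {k: v.strip('"') for k, v in ATTR_RE.findall(attr_text)}
        elif not line.startswith("#") and pending is not None:
            # The URI line that follows the tag identifies the variant's playlist.
            variants.append({
                "uri": line,
                "bandwidth": int(pending["BANDWIDTH"]),
                "resolution": pending.get("RESOLUTION"),
                "codecs": pending.get("CODECS"),
            })
            pending = None
    return variants


variants = parse_variants(SAMPLE_PLAYLIST)
for v in variants:
    print(v["uri"], v["bandwidth"], v["resolution"])
```

A player would use the `bandwidth` values from such a list as the bitrate ladder when deciding which quality to request next.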

To play these streams on PCs (Windows/macOS), JavaScript players built on the HTML5 media extension APIs can be used in the various browsers that support those APIs. On mobile operating systems such as Android and iOS, adaptive streaming can be played with a player developed using the standard APIs or extension components of the operating system or platform.

For JavaScript-based players in browser environments, several open source players are widely used in the market.

Players on Apple operating systems are often developed with AVPlayer, the native playback API in Apple's AVFoundation framework, which supports HLS streaming.

Players on Android often use ExoPlayer, the open source player library developed by Google, which supports both HLS and MPEG-DASH streaming.

The THEOplayer commercial player SDKs that we handle are typically built on the basic functionality described above and on the player functions of each operating system, and they add various advanced features that make your player easier to develop and more valuable across a wider range of scenarios.

Video Streaming Platform

As mentioned above, two video streaming formats are still in use in the market, and platforms that deliver video streams generally need to support both. For this reason, some streaming platforms can dynamically generate and output both formats in real time.

This dynamic stream generation, commonly referred to as “just-in-time packaging”, applies to both live and on-demand streams, and as a result you can build a streaming architecture with a simpler workflow.

Wowza Media Systems' product, which we handle, is one such streaming platform. It supports various input formats and dynamically packages those inputs into MPEG-DASH and HLS streams for video streaming over the Internet.
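The idea of producing both formats on request from one source can be sketched as follows. This is a deliberately simplified illustration and not how any specific product works: it generates only the manifest text, assumes equal-length segments, and uses hypothetical segment file names. The MPD in particular omits details such as codecs and resolution that real players expect.

```python
import xml.etree.ElementTree as ET


def build_hls_playlist(segment_count, segment_seconds):
    """Build a minimal on-demand HLS media playlist for equal-length segments."""
    lines = [
        "#EXTM3U",
        "#EXT-X-VERSION:3",
        f"#EXT-X-TARGETDURATION:{segment_seconds}",
        "#EXT-X-MEDIA-SEQUENCE:0",
    ]
    for i in range(segment_count):
        lines.append(f"#EXTINF:{segment_seconds:.3f},")
        lines.append(f"segment_{i}.ts")  # hypothetical segment name
    lines.append("#EXT-X-ENDLIST")
    return "\n".join(lines) + "\n"


def build_dash_mpd(segment_count, segment_seconds, bandwidth_bps=2500000):
    """Build a heavily simplified static MPD using a number-based SegmentTemplate."""
    mpd = ET.Element("MPD", {
        "xmlns": "urn:mpeg:dash:schema:mpd:2011",
        "type": "static",
        "profiles": "urn:mpeg:dash:profile:isoff-live:2011",
        "mediaPresentationDuration": f"PT{segment_count * segment_seconds}S",
        "minBufferTime": "PT2S",
    })
    period = ET.SubElement(mpd, "Period")
    adaptation = ET.SubElement(period, "AdaptationSet", {"mimeType": "video/mp4"})
    rep = ET.SubElement(adaptation, "Representation",
                        {"id": "video", "bandwidth": str(bandwidth_bps)})
    ET.SubElement(rep, "SegmentTemplate", {
        "timescale": "1",
        "duration": str(segment_seconds),
        "startNumber": "0",
        "initialization": "init.mp4",      # hypothetical file name
        "media": "segment_$Number$.m4s",   # hypothetical file name pattern
    })
    return ET.tostring(mpd, encoding="unicode")


def package(fmt, segment_count, segment_seconds):
    """Generate the manifest for the requested format at request time."""
    if fmt == "hls":
        return build_hls_playlist(segment_count, segment_seconds)
    if fmt == "dash":
        return build_dash_mpd(segment_count, segment_seconds)
    raise ValueError(f"unsupported format: {fmt}")


print(package("hls", 3, 6))
print(package("dash", 3, 6))
```

Because both outputs are derived from the same description of the source, only one copy of the content needs to be stored and maintained, which is where the simpler workflow comes from.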

Closing

In this article, we briefly introduced the history of streaming technology and the technologies that surround it.

Digital video, digital audio, and streaming technologies evolve year by year, so when building your streaming systems and services it is important to keep up with the latest technologies and with the products and solutions that support them. We work with global media companies to support our customers' projects and services while staying up to date with these technologies.